Generative artificial intelligence (genAI) like ChatGPT has to date mostly made its home in the massive data centers of service providers and enterprises. When companies want to use genAI services, they basically purchase access to an AI platform such as Microsoft 365 Copilot — the same as any other SaaS product.

One problem with a cloud-based system is that the underlying large language models (LLMs) running in data centers consume massive GPU cycles and electricity, not only to power applications but to train genAI models on big data and proprietary corporate data. There can also be issues with network connectivity. And, the genAI industry faces a scarcity of specialized processors needed to train and run LLMs. (It takes up to three years to launch a new silicon factory.)

“So, the question is, does the industry focus more attention on filling data centers with racks of GPU-based servers, or does it focus more on edge devices that can offload the processing needs?” said Jack Gold, principal analyst with business consultancy J. Gold Associates.

The answer, according to Gold and others, is to put genAI processing on edge devices. That's why, over the next several years, silicon makers are turning their attention to PCs, tablets, smartphones, even cars, which will allow them to essentially offload processing from data centers — giving their genAI app makers a free ride as the user pays for the hardware and network connectivity.

GenAI digital transformation for businesses is fueling growth at the edge, making it the fastest-growing compute segment, surpassing even the cloud. By 2025, more than 50% of enterprise-managed data will be created and processed outside of the data center or cloud, according to research firm Gartner.

Intel

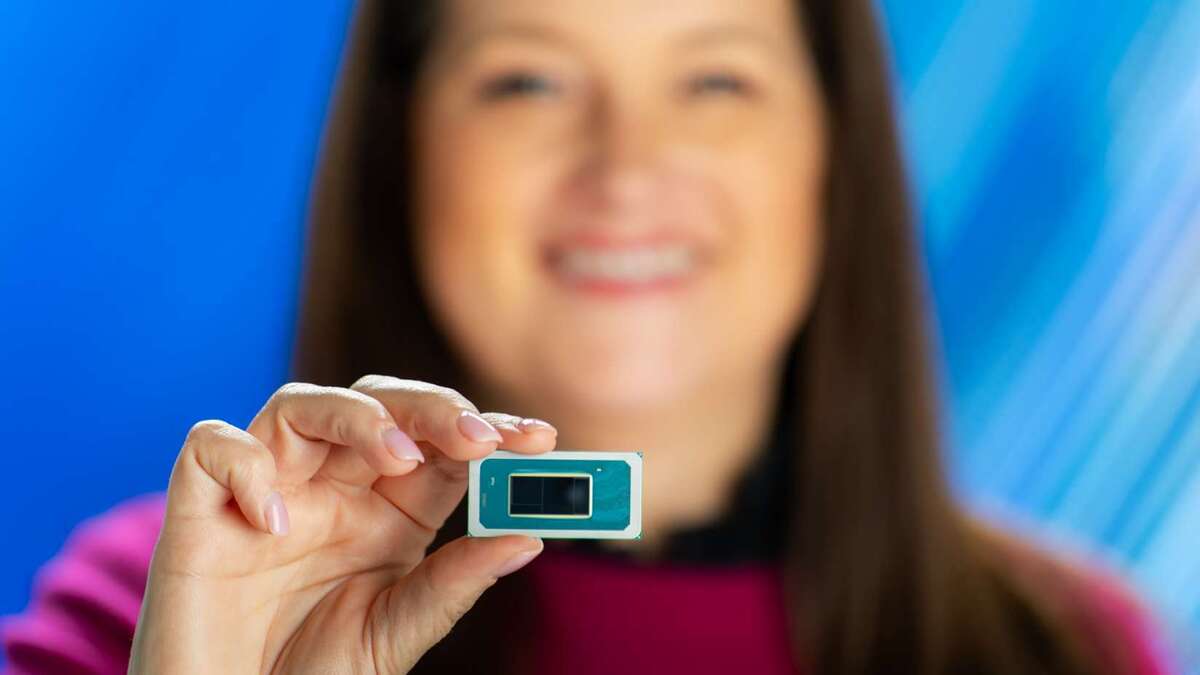

Michelle Johnson Holthaus, general manager of Client Computing at Intel, holds the Core Ultra mobile processor with AI acceleration for endpoint devices.

Microprocessor makers, including Intel, AMD, and Nvidia, have already shifted their focus toward producing more dedicated SoC chiplets and neural processing units (NPUs) that assist edge-device CPUs and GPUs in executing genAI tasks.

Coming soon to the iPhone and other smartphones?

“Think about the iPhone 16, not the iPhone 15, as where this shows up,” said Rick Villars, IDC’s group vice president for worldwide research. Villars was referring to embedded genAI like Apple GPT, a version of ChatGPT that resides on the phone instead of as a cloud service.

Apple GPT could be announced as soon as Apple’s Worldwide Developers Conference in June, when Apple unveils iOS 18 and a brand new Siri with genAI capabilities, according to numerous reports.

Expected soon on those iPhones (and smartphones from other manufacturers) are NPUs on SoCs that will handle genAI functionality like Google’s Pixel 8 “Best Take” photo feature; the feature allows a user to swap the photo of a person’s face with another from a previous image.

“Those processors inside a Pixel phone or an Amazon phone or an Apple phone that ensure you never take a picture where someone isn’t smiling because you can retune it with five other photos and create the perfect picture — that’s great [for the consumer],” Villars said.

A move in that direction allows genAI companies to shift their thinking from an economy of scarcity, where the provider has to pay for all the work, to an economy of abundance, where the provider can safely assume that some key tasks can be handled for free by the edge device, Villars said.

The release of the next version of Windows (perhaps called Windows 12) later this year is also expected to be a catalyst for genAI adoption at the edge; the new OS is expected to have AI features built in.

The use of genAI at the edge goes well beyond desktops and photo manipulation. Intel and other chipmakers are targeting verticals such as manufacturing, retail, and the healthcare industry for edge-based genAI acceleration.

Retailers, for instance, will have accelerator chips and software on point-of-sale systems and digital signs. Manufacturers could see AI-enabled processors in robotics and logistics systems for process tracking and defect detection. And clinicians might use genAI-assisted workflows — including AI-based measurements — for diagnostics.

Intel claims its Core Ultra processors launched in December offer a 22% to 25% increase in AI performance throughput for real-time ultrasound imaging apps compared to previous Intel Core processors paired with a competitive discrete GPU.

“AI-enabled applications are increasingly being deployed at the edge,” said Bryan Madden, global head of AI marketing at AMD. “This can be anything from an AI-enabled PC or laptop to an industrial sensor to a small server in a restaurant to a network gateway or even a cloud-native edge server for 5G workloads.”

GenAI, Madden said, is the “single most transformational technology of the last 50 years.”

In fact, genAI is already being used in multiple industries, including science, research, industrial, security, and healthcare — where it's driving breakthroughs in drug discovery and testing, medical research, and advances in medical diagnoses and treatment.

AMD adaptive computing customer Clarius, for instance, is using genAI to help doctors diagnose physical injuries. And Japan’s Hiroshima University uses AMD-powered AI to help doctors diagnose certain types of cancer.

“We are even using it to help design our own product and services within AMD,” Madden said.

A time of silicon scarcity

The silicon industry at the moment has a problem: processor scarcity. That’s one reason the Biden Administration pushed through the CHIPS Act to reshore and increase silicon production. The administration also hopes to ensure the US isn’t beholden to offshore suppliers such as China. Beyond that, even if the US were in a period of processor abundance, the chips required for generative AI consume a lot more power per unit.

“They’re just power hogs,” Villars said. “A standard corporate data center can accommodate racks of about 12kW per rack. One of the GPU racks you need to do large language modeling consumes about 80kW. So, in a sense, 90% of modern corporate data centers are [financially] incapable of bringing AI into the data center.”
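Taking Villars' rough figures at face value, the size of that gap is easy to work out. The short Python sketch below is illustrative only: the two power figures come from the quote above, while the function names and the 20-rack example are hypothetical, and the calculation ignores cooling and distribution overhead.

```python
# Approximate per-rack power figures quoted by Villars (kilowatts).
STANDARD_RACK_KW = 12.0
GPU_RACK_KW = 80.0


def power_ratio(gpu_kw: float = GPU_RACK_KW,
                standard_kw: float = STANDARD_RACK_KW) -> float:
    """How many times more power a GPU rack draws than a standard rack."""
    return gpu_kw / standard_kw


def gpu_racks_supported(standard_racks: int,
                        gpu_kw: float = GPU_RACK_KW,
                        standard_kw: float = STANDARD_RACK_KW) -> int:
    """Whole GPU racks that fit in a power budget sized for standard racks."""
    budget_kw = standard_racks * standard_kw
    return int(budget_kw // gpu_kw)


if __name__ == "__main__":
    print(f"A GPU rack draws about {power_ratio():.1f}x a standard rack")
    # Hypothetical room provisioned for 20 standard racks.
    print(f"20 standard racks of power feed {gpu_racks_supported(20)} GPU racks")
```

On these numbers, a room provisioned for 20 ordinary racks could power only three GPU racks, which is the kind of mismatch Villars is describing.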

Intel, in particular, stands to benefit from any shift away from AI in the data center to edge devices. It's already pitching an “AI everywhere” theme, meaning AI acceleration in the cloud, corporate data centers — and at the edge.

AI applications and their LLM-based platforms run inference algorithms, that is, they apply machine learning to a dataset and generate an output. That output essentially predicts the next word in a sentence, image, or line of code in software based on what came before.
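To make the idea of predicting the next word concrete, here is a deliberately tiny Python sketch. It is not how an LLM works internally (real models use neural networks over tokens, not word counts); it assumes a simple bigram count model as a stand-in, and the corpus and function names are invented for illustration. Building the counts plays the role of training, and the lookup plays the role of inference.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """'Training': count which word follows which across the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """'Inference': return the most frequent follower of `word`, or None."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical three-sentence corpus.
    corpus = [
        "the edge device runs inference",
        "the edge device saves power",
        "the data center trains models",
    ]
    model = train_bigram(corpus)
    print(predict_next(model, "edge"))  # most common word seen after "edge"
    print(predict_next(model, "the"))   # most common word seen after "the"
```

The lookup step is cheap compared with building the counts, which loosely mirrors why inference is the lighter workload of the two.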

NPUs will be able to handle the less-intensive inference processing while racks of GPUs in data centers would tackle the training of the LLMs, which feed on information from every corner of the internet as well as proprietary data sets offered up by companies. A smartphone or PC would only need the hardware and software to perform inference functions on data residing on the device or in the cloud.

Intel’s Core Ultra processors, the first to be built using the new Intel 4 process, made their splash powering AI acceleration on PCs. But they’re now heading to edge devices, according to Bill Pearson, vice president of Intel’s network and edge group.

“It has CPU, GPU, and NPU on it," he said. "They all offer the ability to run AI, and particularly inference and accelerate, which is the use case we see at the edge. As we do that, people are saying, 'I have data that I don’t want to send to the cloud' — maybe because of cost, maybe because it’s private and they want to keep the data onsite in the factory…or sometimes in my country. By offering compute [cycles] where the data is, we’re able to help those folks leverage AI in their product.”

Intel plans to ship more than 100 million processors for PCs in the next few years, and it's expected to power AI in 80% of all PCs. And Microsoft has committed to adding a number of AI-powered features to its Windows OS.

Apple has similar plans. In 2017, it introduced the A11 Bionic SoC with its first Neural Engine, a part of the chip dedicated and custom-built to perform AI tasks on the iPhone. Since then, every A-series chip has included a Neural Engine, as did the M1 processor released in 2020, which brought AI processing capabilities to the Mac. The M1 was followed by the M2 and, just last year, the M3, M3 Pro, and M3 Max, the industry’s first 3-nanometer chips for a personal computer.

Each new generation of Apple Silicon has added the ability to handle more complex AI tasks on iPhones, iPads, and Macs with faster, more efficient CPUs and more powerful Neural Engines.

“This is an inflection point for new ways to interact and new opportunities for advanced functions, with many new companies emerging,” Gold said. “Just as we went from CPU alone, to integrated GPU on chip, nearly all processors going forward will include an NPU AI Accelerator built in. It's the new battleground and enabler for advanced functions that will change many aspects of software apps.”

Apple

Apple's latest AI-enabled M3 chip, released in 2023, came with a faster Neural Engine. Each new generation of Apple’s chips allows devices to handle more complex AI tasks.

AMD is adding AI acceleration to its processor families, too, so it can challenge Intel for performance leadership in some areas, according to Gold.

“Within two to three years, having a PC without AI will be a major disadvantage," he said. "Intel Corporation is leading the charge. We expect that at least 65% to 75% of PCs will have AI acceleration built-in in the next three years, as well as virtually all mid-level to premium smartphones.”

For an industry fighting headwinds from weak memory prices, and weak demand for smartphone and computer chips, genAI chips provided a growth area, especially at leading manufacturing nodes, according to a new report from Deloitte.

"In 2024, the market for AI chips looks to be strong and is predicted to reach more than $50 billion in sales for the year, or 8.5% of the value of all chips expected to be sold for the year," the report stated.

In the longer term, there are forecasts suggesting that AI chips (mainly genAI chips) could reach $400 billion in sales by 2027, according to Deloitte.

The competition for a share of the AI chip market is likely to become more intense during the next several years. And while numbers vary by source, stock market analytics provider Stocklytics estimates the AI chip market raked in nearly $45 billion in 2022 and $54 billion in 2023.

"AI chips are the new talk in the tech industry, even as Intel plans to unveil a new AI chip, the Gaudi3,” said Stocklytics financial analyst Edith Reads. “This threatens to throw Nvidia and AMD chips off their game next year. Nvidia is still the dominant corporation in AI chip models. However, its explosive market standings may change, given that many new companies are showing interest in the AI chip manufacturing race."

OpenAI's ChatGPT uses Nvidia GPUs, which is one reason Nvidia is getting the lion’s share of market standings, according to Reads.

“Nvidia’s bread and butter in AI are the H class processors," according to Gold.

“That’s where they make the most money and are in the biggest demand,” Reads added.

AI edge computing alleviates latency, bandwidth, and security issues

Because AI at the edge ensures computing is done as close to the data as possible, any insights from it can be retrieved far faster and more securely than through a cloud provider.

“In fact, we see AI being deployed from end points to edge to the cloud,” AMD’s Madden said. “Companies will use AI where they can create a business advantage. We are already seeing that with the advent of AI PCs.”

Enterprise users will not only take advantage of PC-based AI engines to act on their data, but they’ll also access AI capabilities through cloud services or even on-prem instantiations of AI, Madden said.

“It’s a hybrid approach, fluid and flexible," he said. "We see the same with the edge. Users will take advantage of ultra-low latency, enhanced bandwidth and compute location to maximize the productivity of their AI application or instance. In areas such as healthcare, this is going to be crucial for enhanced outcomes derived through AI."

There are other areas where genAI at the edge is needed for timely decision-making, including computer vision processing for smart retail store applications or object detection that enables safety features on a car. And being able to process data locally can benefit applications where security and privacy are concerns.

AMD has aimed its Ryzen 8040 Series chips at mobile devices and its Ryzen 8000G Series at desktops, with a dedicated AI accelerator: the Ryzen AI NPU. (Later this year, it plans to roll out a second-generation accelerator.)

AMD’s Versal Series of adaptive SoCs allows users to run multiple AI workloads simultaneously. The Versal AI Edge series, for example, can be used for high-performance, low-latency uses such as automated driving, factory automation, advanced healthcare systems, and multi-mission payloads in aerospace systems. Its Versal AI Edge XA adaptive SoC and Ryzen Embedded V2000A Series processor are designed for autos, and next year it plans to launch its Versal AI Edge and Versal AI Core series adaptive SoCs for space travel.

It’s not just about the chips

Deepu Talla, vice president of embedded and edge computing at Nvidia, said genAI is bringing the power of natural language processing and LLMs to virtually every industry. That includes robotics and logistics systems for defect detection, real-time asset tracking, autonomous planning and navigation, and human-robot interactions, with uses across smart spaces and infrastructure (such as warehouses, factories, airports, homes, buildings, and traffic intersections).

“As generative AI advances and application requirements become increasingly complex, we need a foundational shift to platforms that simplify and accelerate the creation of edge deployments,” Talla said.

To that end, every AI chip developer also has introduced specialized software to take on more complex machine-learning tasks so developers can more easily create their own applications for those tasks.

Nvidia designed its low-code TAO Toolkit for edge developers to train AI models on devices at the “far edge.” ARM is leveraging TAO to optimize AI runtime on Ethos NPU devices, and STMicroelectronics uses TAO to run complex vision AI on its STM32 microcontrollers.

“Developing a production-ready edge AI solution entails optimizing the development and training of AI models tailored to the specific use case, implementing robust security features on the platform, orchestrating the application, managing fleets, establishing seamless edge-to-cloud communication and more,” Talla said.

For its part, Intel created an open-source tool kit called OpenVINO; it was originally embedded in computer vision systems, which at the time was largely what was happening at the edge. Intel has since expanded OpenVINO to operate multi-modal systems that include text and video — and now it’s expanded to genAI as well.

“At its core was customers trying to figure how to program to all these different types of AI accelerators," Intel’s Pearson said. "OpenVINO is an API-based programming mechanism where we’ve strapped the type of computing underneath. OpenVINO is going to run best on the type of hardware it has available. When I add that into the Core Ultra..., for example, OpenVINO will be able to take advantage of the NPU and GPU and CPU.

“So, the toolkit greatly simplifies the life of our developers, but also offers the best performance for the applications they’re building,” he added.
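Pearson's description, one programming interface that runs on whichever accelerators a machine happens to have, can be illustrated with a small fallback routine. The Python sketch below is hypothetical and does not call OpenVINO; the function name, the NPU-then-GPU-then-CPU preference order, and the example device lists are all assumptions made for illustration.

```python
def pick_device(available, preference=("NPU", "GPU", "CPU")):
    """Return the first preferred device that is actually present.

    `available` is any collection of device-name strings; `preference`
    is an assumed priority order, not one taken from a real toolkit.
    """
    for device in preference:
        if device in available:
            return device
    raise RuntimeError("no supported compute device found")


if __name__ == "__main__":
    # Hypothetical machines: one with all three engines, one with only a CPU.
    print(pick_device({"CPU", "GPU", "NPU"}))
    print(pick_device({"CPU"}))
```

The application code stays the same on both machines; only the selected device changes, which is the kind of abstraction Pearson describes.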